The World of Embedding Vectors

17 Apr 2023

I’ve long been curious but hesitant about using embedding vectors generated from pre-trained neural networks with self-supervision.

Coming from a brief but intense computer vision (CV) background, I still remember that the first deep learning tutorials I came across were MNIST handwritten digit classification and ImageNet classification. Supervised learning with class labels, softmax, and cross-entropy loss was the standard formulation. Arguably, it was facial recognition that pushed this formulation to the extreme. With tens of thousands of identities in the training set, telling them apart with certainty became difficult with the standard loss function. So instead of softmax and cross-entropy loss, practitioners turned to contrastive formulations, such as the triplet loss in the FaceNet paper. Features learned this way were grouped tightly within a class and pushed far apart between classes, giving them strong discriminative power.
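To make the triplet idea concrete, here is a minimal sketch in plain Python. It assumes L2-normalized embeddings and squared Euclidean distance, which is how the triplet loss is usually described; the function names and the margin value of 0.2 are illustrative choices for this post, not taken from any particular codebase.

```python
import math


def l2_normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge on (anchor-positive distance) - (anchor-negative distance) + margin.

    The loss is zero once the negative is at least `margin` farther from
    the anchor than the positive is. The margin default is an assumption.
    """
    a, p, n = (l2_normalize(v) for v in (anchor, positive, negative))
    return max(0.0, squared_distance(a, p) - squared_distance(a, n) + margin)
```

A triplet that is already well separated contributes nothing, so in practice most of the work goes into mining triplets that still violate the margin. That mining step is also part of why this formulation is awkward to batch efficiently.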

However, the triplet approach was not able to make efficient use of the highly parallel architecture of GPUs. Soon after, a series of modified softmax-based loss formulations proved equally effective while being friendly to the nature of GPUs. They apply a margin to the target-class softmax term, pulling intra-class features closer together and pushing inter-class features farther apart, with a discriminative effect similar to that of the triplet loss. This line of work culminated in the ArcFace paper. In addition, to keep the fully connected (FC) classification layer, whose size scales with the number of classes, from outgrowing the limited GPU memory, some model parallelism was worked out to split it evenly across multiple GPUs with just a small amount of inter-device communication. With an abundance of interconnected GPUs, millions of identities or more were suddenly within sight. The facial recognition models trained using this approach were able to tell apart the world’s population, past, present, and future.
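The margin idea can be sketched for a single training sample as follows. This is a simplified, ArcFace-style additive angular margin written in plain Python: the embedding and the per-class weight vectors are L2-normalized, the margin is added to the angle of the target class only, and the result goes through an ordinary softmax cross-entropy. The `scale` and `margin` defaults are assumptions chosen for illustration, and the sketch skips the special handling real implementations add when the angle plus the margin exceeds π.

```python
import math


def l2_normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def arcface_loss(embedding, class_weights, label, scale=64.0, margin=0.5):
    """Cross-entropy over cosine logits, with an angular margin on the target class."""
    e = l2_normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        w = l2_normalize(w)
        cos_theta = sum(x * y for x, y in zip(e, w))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos against rounding
        if j == label:
            # Penalize only the target class by widening its angle.
            cos_theta = math.cos(math.acos(cos_theta) + margin)
        logits.append(scale * cos_theta)
    # Numerically stable log-sum-exp for the softmax denominator.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[label]
```

With `margin=0.0` this reduces to a plain softmax loss over scaled cosine similarities. Because every class is scored in one pass over the weight matrix, the whole thing maps naturally onto a batched matrix multiply, which is the GPU-friendliness mentioned above.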

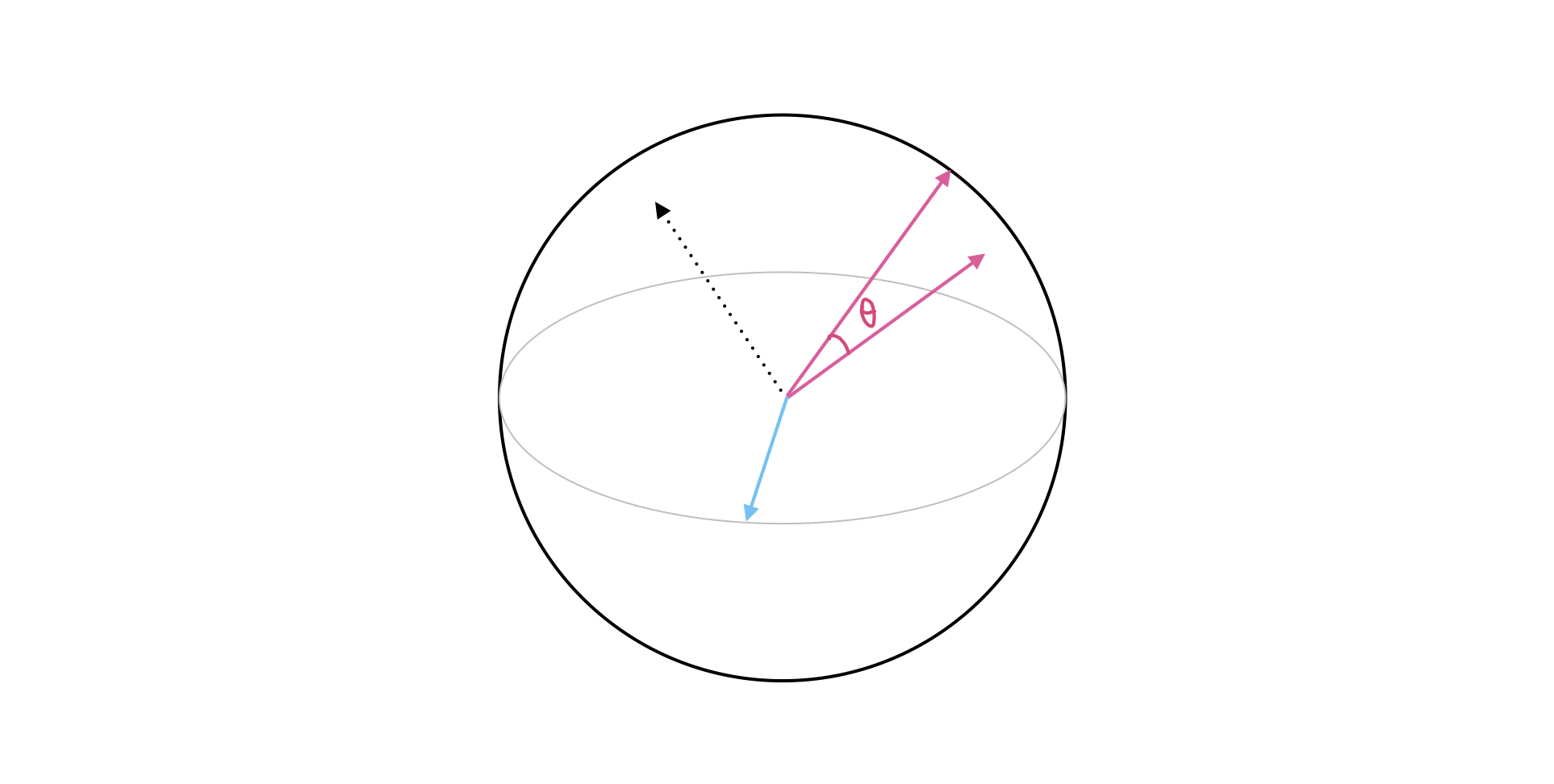

You could use the embedding vectors from these neural networks to compute cosine similarity scores to find someone in a vector database, and they would do a fantastic job. The networks were trained with definitive ground-truth labels for a single task: a face either belongs to a certain unique person or not; there is no middle ground. However, not all vectors are born equal. For general vision or language tasks, an object can belong to multiple conceptual categories: a “chihuahua” is a “dog”, also an “animal”, and oftentimes a “pet”. This makes the concept of similarity ambiguous. Depending on the training process, be it supervised with explicit class labels or self-supervised with contrastive pre-training, you end up with entirely different statistical distributions in the high-dimensional space. Without domain-specific fine-tuning, these embeddings are simply not trained with the explicit and singular purpose of grouping things together or telling them apart. Expecting a single number, such as a cosine similarity score, to capture the nuances of these relations doesn’t seem to make much sense.
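For reference, the lookup itself is tiny. The sketch below uses a plain dictionary as a stand-in for a vector database and ranks entries by cosine similarity; the names `cosine_similarity` and `top_k` are made up for this example, and a real system would use an approximate nearest-neighbor index instead of a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=3):
    """Return the k (score, name) pairs most similar to the query.

    `index` is a hypothetical in-memory store mapping a name to its vector.
    """
    scored = [(cosine_similarity(query, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)
    return scored[:k]
```

The score says only how aligned two vectors are. Whether that alignment means "same person", "same breed", or "both are pets" depends entirely on what the embedding model was trained to separate.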

With progress in large language models (LLMs) making me feel like a dinosaur every few months, the field of information retrieval and search relevance got a new player in town: embedding vectors. Lately they are all the hype because they are supposed to be the cure for LLMs’ hallucination problem. By embedding text into vectors and finding the top-K similar ones, one limits the scope of the LLM’s mental space, leading to better fidelity. That’s fine. However, what is not often talked about is the granularity at which one should create embeddings: sentences, paragraphs, or whole articles. Chunks that are too short or too long are both ineffective. Also, out-of-domain usage is not competitive against the decades-old BM25 baseline. Moreover, is K-nearest neighbors (KNN) based solely on similarity scores the right method? Some people even find that a support vector machine (SVM) delivers more meaningful search results than KNN. You shouldn’t use embedding vectors without thinking through these questions. That’s the point I’m trying to make.
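Since BM25 is the baseline worth comparing against, here is a minimal sketch of the commonly cited Okapi BM25 scoring formula in plain Python. It assumes whitespace tokenization, lowercasing, and non-empty documents, and the `k1` and `b` defaults are typical values rather than tuned ones; production search engines add proper analyzers and an inverted index on top of this.

```python
import math
from collections import Counter


def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Number of documents each term appears in.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            f = term_freq[term]
            if f == 0:
                continue
            n_t = doc_freq[term]
            idf = math.log((n_docs - n_t + 0.5) / (n_t + 0.5) + 1.0)
            # Term frequency saturates with k1; b controls length normalization.
            score += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * len(tokens) / avg_len))
        scores.append(score)
    return scores
```

Running the same queries through both this and an embedding-based top-K search on your own data is a cheap sanity check before committing to a vector-only pipeline.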

How does this apply to programming code? As a subset of text, it has unique properties and applications. Being able to ask computers to code on their own is a cornerstone for scaling up intelligence. Code is more logical and follows stricter syntactical rules. It’s easier in some ways, and harder in others. We’ve seen the success of GitHub Copilot, so for generative tasks such as completing a function or adding docs, it does a decent job. But how well do models embed all of that information into vectors for similarity comparison? I had the chance to look into this over the past few days. I will do more and write about it. Please stay tuned!