My Programming Journey

Mar 20, 2023

I originally intended this to be a short paragraph in the middle of a thought piece I wrote after learning Rust for a week. But the short version kept expanding into a full post. So here it is.

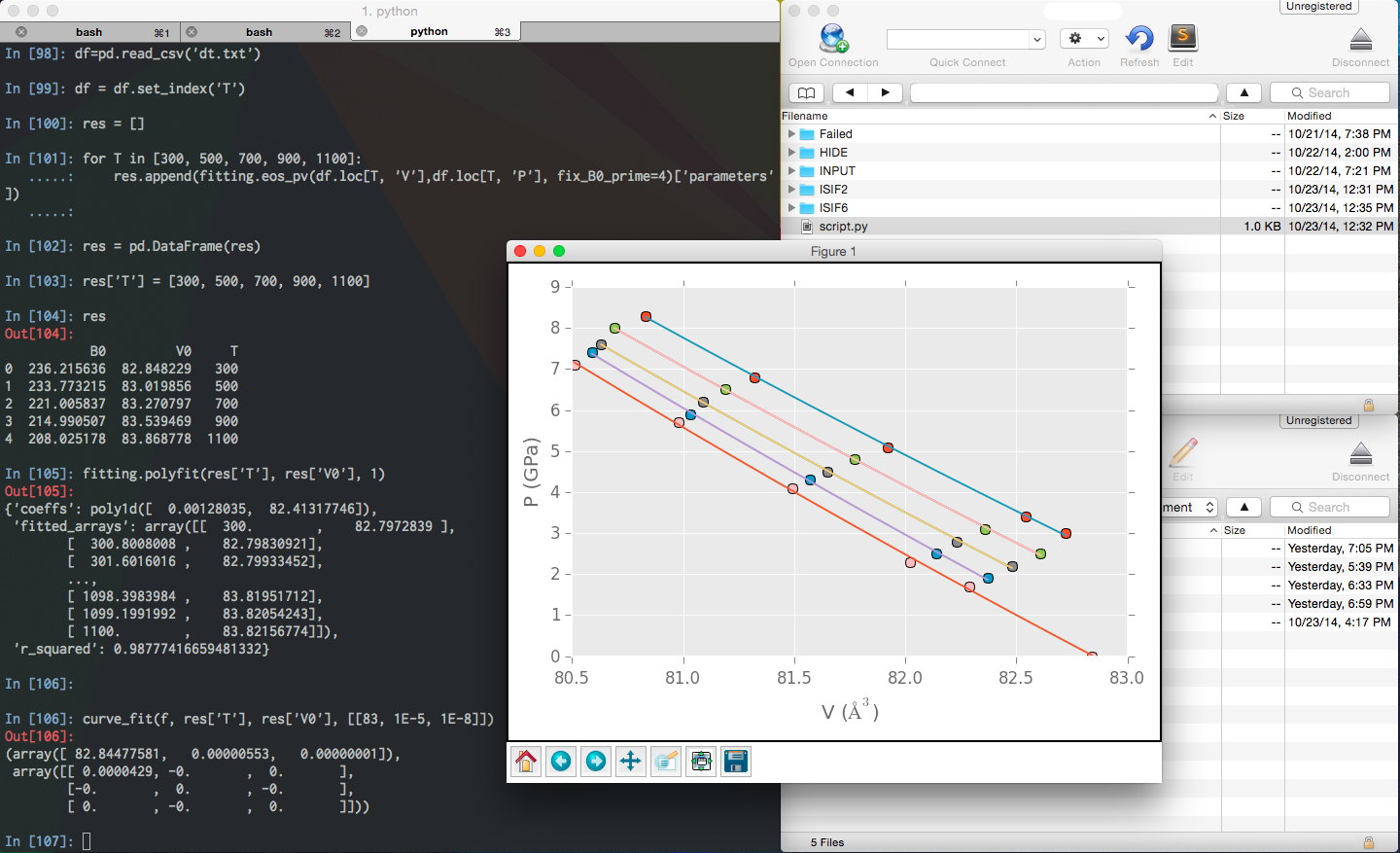

This is my personal programming journey. I learned a tiny bit of Pascal in high school around 2005, but I focused more on playing Counter-Strike and making maps (more productive than being just a gamer?). We were required to learn C in college, but I hated it because the exams seemed designed to trick us into memorizing syntax quirks. I started learning Python (with A Byte of Python) and Linux seriously in 2012, in grad school, for my scientific computing needs. Around the same time, the Python scientific ecosystem really started to flourish, as data science and machine learning rose and attracted a lot of money from industry.

Python is a very intuitive language, with plain, English-like syntax (progressive disclosure of complexity) and predictable behavior. It abstracts much of the complexity away from novice users: they don’t have to worry about resource management, and they get memory safety automatically. Its standard library and ecosystem are vast, and you can almost always find something someone else built and start using it after a few simple installation steps. All of this comes at the cost of performance: Python is dynamically typed, interpreted, garbage collected, and (in the dominant CPython implementation, at least) constrained by a global interpreter lock (GIL). Common workarounds involve interfacing with a lower-level language (C/C++) to get native speed and multithreading without the GIL. With enough care, you can usually get around the bottleneck at hand, only to find that the next bottleneck in a compute-centric system is something else entirely. Python is a T-shaped language.

These properties carried Python all the way into the era of AI and made it the de facto language for deep learning. The same pattern plays out among the frameworks built on top of Python. PyTorch became the dominant framework among AI researchers because, with eager execution, it prioritized intuitiveness and flexibility over performance. The framework maintainers then recovered performance with lower-level graph and compiler techniques. These design choices in Python and PyTorch (abstracting complexity away and dividing the labor) empowered deep learning researchers who weren’t fluent in less intuitive languages and frameworks, and freed those who were fluent from being slowed down by them while working on domain problems.

But I’m getting ahead of myself. During my grad school years, I also learned Java from a book I borrowed from my roommate. It was also the language typically used to teach algorithms and data structures courses, so resources were abundant. Its garbage collection and argument passing (object references passed by value, much like Python’s pass-by-assignment) were similar enough to Python that it was just a matter of adding static typing and the JVM to my mental model of programming. However, because I wasn’t trying to build commercial software, Java’s object-oriented verbosity kept me from moving at full speed.

After finishing grad school in the summer of 2017, I had a brief Swift learning phase at a coffee shop. I wasn’t much of a fan of mobile programming, though, so I mostly read the guide over a few days, comparing it against what I knew best, Python. What stood out to me was that value types (e.g., structs) are copied in their entirety when passed to a function, whereas reference types (e.g., classes) are passed as pointer-like references. In Java, this copying behavior applies only to “primitive” types; in Python, every argument is a reference to an object, and immutable types such as int and str merely behave as if they were copied. Optional unwrapping had some funky syntax, and because Swift uses automatic reference counting (ARC), avoiding reference cycles was worth paying attention to. Other than that, I never had to think about resource management or pointers (they do exist, of course, in the “unsafe” corner of the language).
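To make the Python side of that comparison concrete, here is a minimal sketch. The `Point` class and the function names are hypothetical, made up for illustration. Python hands a function a reference to the caller’s object, so a mutation inside the function is visible outside; to get something like a Swift struct’s copy-on-pass behavior, you have to copy explicitly, for example with `copy.copy`.

```python
import copy
from dataclasses import dataclass


@dataclass
class Point:
    # Hypothetical mutable type; in Swift this could be a struct (a value type)
    x: int
    y: int


def shift_in_place(p: Point) -> None:
    # p refers to the caller's object, so this mutation is visible outside
    p.x += 1


def shift_copy(p: Point) -> Point:
    # Copy explicitly first, roughly what Swift does implicitly for value types
    q = copy.copy(p)
    q.x += 1
    return q


def rebind(value: int) -> None:
    # Rebinding the local name does not affect the caller's variable
    value += 1


a = Point(0, 0)
shift_in_place(a)
print(a)  # Point(x=1, y=0): the caller sees the change

b = Point(0, 0)
c = shift_copy(b)
print(b, c)  # Point(x=0, y=0) Point(x=1, y=0): the original is untouched

n = 5
rebind(n)
print(n)  # 5: ints are immutable, so they only *look* copied
```

`copy.copy` is a shallow copy; a `Point` holding nested mutable objects would need `copy.deepcopy` to come closer to Swift’s value semantics.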

That summer, I accepted a job offer at Bloomberg. On the job, I got serious about C++ because systems programming with OpenSSH and PAM modules demanded it. Of course, you could do it in C, but why not C++ if you could use more modern syntax and libraries? It was a nurturing environment for C++ because NYC finance shops were all about correctness and extracting performance, so I took the time to learn about resource management and the dos and don’ts of the language. The lack of a standard package manager was definitely a deterrent, so I ended up installing a lot of stuff into Docker containers’ system paths and checking out code ad hoc from GitHub. Reading C++ documentation hurt my head, but I had slogged through plenty of lengthy manuals in grad school, so I persevered. It was relatively easy to go along with an existing project and contribute to it by osmosis, but a lot harder to take full advantage of the C++11/14/17/20 features piling up on top of the existing complexity. I could sense they were necessary, though, because only with the newer syntax could you express naturally what you would say in Python or Swift. I got sucked into the world of smart pointers, rvalue references, and move semantics. However, it was such a big language with so much existing code, and there were just too many ways of doing the same thing with slightly different trade-offs. But when it compiled successfully and ran without problems, it felt like all the pain was worth it.

Since my job was on backend systems and platforms, something was missing between flexible but slow Python and efficient but complex C++. These network-connected backends and platforms relied heavily on Web API-driven designs and multithreaded execution. One language was practically born for the job: Go. It is intentionally simple, with an amazing package manager and documentation hub. It is very fast and makes concurrency and parallelism nearly effortless with goroutines. The languages I had learned up to then traced a path toward ever more complexity, from Python to Java, to Swift, and to C++. Go broke that trend, and it does not follow the popular object-oriented norm either, preferring interfaces and composition over classes and inheritance. You also get the ease of garbage collection, and slices and maps can be returned from functions without copying their underlying data. Choosing between Python and Go for a web server boils down to whether you need Python’s vast ecosystem or high concurrent throughput and low memory usage.

I kept using Python and C++, and occasionally Go, for a few more years. And the magic soup started stirring: there is something to be said about the mental maturing process of a programmer. You start as a novice, surprised and daunted all the time. You keep learning and become decently competent at the craft, having seen just enough to say, “everything is pretty similar.” Then your experience deepens, and everything becomes nuanced and complex again: different languages reflect the times in which they were born and the problems they aimed to solve, and they draw from an ingenious pool of building blocks, some common, some less so.

Now is the time for some Rust.