Individual Drive and Organizational Layout in the Age of AI Agents

Feb 1, 2026

Many people are talking about the power of ever-newer AI agents, and what’s SOTA today expires tomorrow. However, many have also observed that AI for automating knowledge work has crossed some sort of boundary of late (e.g. Karpathy, and Cherny, the creator of Claude Code), as if the uncanny valley has been crossed. Maybe it was just Opus 4.5, which materially improved beyond the last frontier. Maybe all of those models have improved due to post-training agentic calibration towards practical tasks. From a technological progress standpoint, this may just be one small step towards the eventuality that everyone has been working towards for years. But it signals some kind of maturation, such that AI can finally be depended upon en masse. Most individuals, and most companies, even the ones actively adopting AI into their people’s daily work lives, have not fully embraced or benefited from this new paradigm. I’m trying to draw some metaphysical points from firsthand experience.

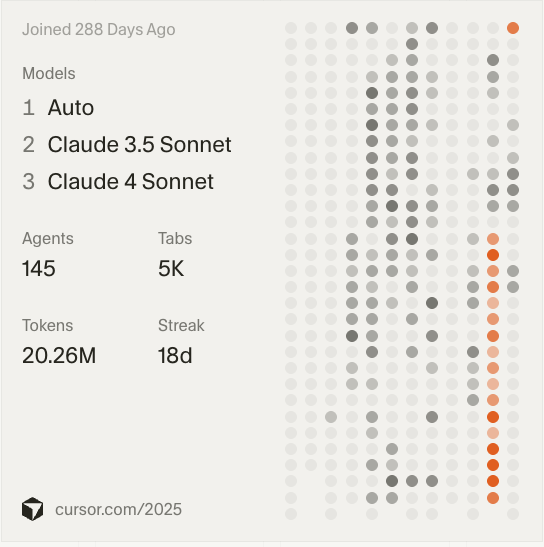

A brief history. My work has been mostly in the backend, ML, and data. Back in 2022/2023 when Copilot and Cursor were released to the public, people were transitioning from a more intelligent auto-complete that kept them in control to the shock and awe of AI coding out entire UIs. I started learning Rust in early 2023, but AI wasn’t very helpful, given its limited exposure to the language on the Internet and limited reasoning abilities. My first big project in Rust was at the end of 2023, and it lasted a couple of months. I remember that approaching mid-2024, AI got a lot more helpful. My two primary modes of AI usage were still quite limited then: I would have Q&As in Claude.ai on questions that could help my own learning, or I would use the increasingly powerful auto-complete in the IDE. I only started using Cursor at the beginning of 2025 for its more powerful (and trigger-happy) auto-complete, and still did not appreciate its agent mode. Coding agents moved my code and files around too much without precision, and the unwanted creativity wasn’t worth giving up control for. In other words, I was a Big Tabber.

Somewhere in 2025, my product-focused colleagues were incorporating AI agents more into their workflows in experimental ways, creating guiding prompts and plans, and sometimes slowing down noticeably because they ended up waiting on the AI they had delegated their tasks to, and each iteration took a lot of “thinking”. It was also a time when people started to complain that vibe-coded code was getting a little overwhelming at MR/PR review time[1].

The scales really tipped at the end of 2025. I noticed myself using AI agents in Cursor a lot more, and started using Claude Code, because

- they were able to make more pinpointed changes, keeping me in control, and

- when they made bigger and cross-file changes, they tended to be more often correct, thorough, and insightful.

The boundary of trust has been crossed. I would ask an agent to do a few things:

- generate me some NEON SIMD code to match the AVX2 and AVX-512 counterparts and reason through the lines (and let Claude blind-check then cross-check it).

- find me all references of some Terraform `minSize` in different formats across the repo that were hard to replace-all.

- recreate some commits preserving timestamps and authors by digging into the reflogs.

- quickly examine all the Alembic heads that got migrated in a botched migration, look at the migration files, figure out the damage, and decide whether to rollback or go forward.
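As a rough illustration of the first item, here is a minimal Rust sketch of what "matching" a NEON kernel to its AVX2 counterpart and cross-checking it can look like. The actual kernels are not shown in this post, so a wrapping `i32` sum stands in for them; all function names here are hypothetical, and the check simply compares each SIMD path against a scalar reference.

```rust
/// Scalar reference implementation: wrapping sum of i32 values.
pub fn sum_scalar(xs: &[i32]) -> i32 {
    xs.iter().fold(0i32, |acc, &x| acc.wrapping_add(x))
}

/// AVX2 path (assumed stand-in kernel): 8 lanes of i32 per 256-bit register.
#[cfg(target_arch = "x86_64")]
#[target_feature(enable = "avx2")]
unsafe fn sum_avx2(xs: &[i32]) -> i32 {
    use std::arch::x86_64::*;
    let chunks = xs.chunks_exact(8);
    let tail = chunks.remainder();
    let mut acc = _mm256_setzero_si256();
    for c in chunks {
        // Unaligned load of 8 x i32, then lane-wise wrapping add.
        let v = _mm256_loadu_si256(c.as_ptr() as *const __m256i);
        acc = _mm256_add_epi32(acc, v);
    }
    // Spill the accumulator and reduce the 8 lanes with the scalar code.
    let mut lanes = [0i32; 8];
    _mm256_storeu_si256(lanes.as_mut_ptr() as *mut __m256i, acc);
    sum_scalar(&lanes).wrapping_add(sum_scalar(tail))
}

/// NEON path (assumed stand-in kernel): 4 lanes of i32 per 128-bit register.
#[cfg(target_arch = "aarch64")]
fn sum_neon(xs: &[i32]) -> i32 {
    use std::arch::aarch64::*;
    let chunks = xs.chunks_exact(4);
    let tail = chunks.remainder();
    // NEON is part of the aarch64 baseline, so no runtime detection here.
    unsafe {
        let mut acc = vdupq_n_s32(0);
        for c in chunks {
            acc = vaddq_s32(acc, vld1q_s32(c.as_ptr()));
        }
        // Horizontal add across the 4 lanes, then the leftover elements.
        vaddvq_s32(acc).wrapping_add(sum_scalar(tail))
    }
}

/// Dispatch to whichever SIMD path the target supports.
#[cfg(target_arch = "x86_64")]
pub fn sum_simd(xs: &[i32]) -> i32 {
    if is_x86_feature_detected!("avx2") {
        unsafe { sum_avx2(xs) }
    } else {
        sum_scalar(xs)
    }
}

#[cfg(target_arch = "aarch64")]
pub fn sum_simd(xs: &[i32]) -> i32 {
    sum_neon(xs)
}

#[cfg(not(any(target_arch = "x86_64", target_arch = "aarch64")))]
pub fn sum_simd(xs: &[i32]) -> i32 {
    sum_scalar(xs)
}

/// Cross-check: SIMD and scalar results must agree on every input.
pub fn cross_check() -> bool {
    // Lengths cover empty input, partial vectors, and exact multiples.
    [0usize, 1, 3, 4, 7, 8, 9, 31, 64, 1000].iter().all(|&len| {
        // Large multipliers force overflow so wrapping behavior is exercised.
        let data: Vec<i32> = (0..len as i32)
            .map(|i| i.wrapping_mul(2_000_003))
            .collect();
        sum_simd(&data) == sum_scalar(&data)
    })
}
```

Running `cross_check()` on both an x86_64 and an aarch64 machine is the cheap version of the blind-check then cross-check loop: each platform's path is written independently, and both must agree with the same scalar reference.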

Once they showed that they were working through the problems and cross-checking, I knew I needed to do less of it. And man, they were tenacious (programmed to be, but uncannily alive).

My intuition from using AI in knowledge work for some time is that if you give it the right context (enough, but not too distracting) and the right directive, in a finite form with the possibility to iterate in a tight feedback loop, AI will do a great job. AI is both a much better generalist than people (we have known that for a while now), and a much better specialist (thanks to more recent refinements) in highly symbolic and localized settings (like juggling each hardware vendor’s SIMD functions). Just in terms of automating sophisticated knowledge work, we blasted through the stochastic parrot era, and gave LLMs expressions and tools for thinking and reasoning, unique to their nature. World models are still not here, but in the semantic space of human & machine languages, LLMs move like fish in water. This was one of the key insights that allowed NLP to accelerate so much faster than computer vision: compared to the physical world with strict laws and coherence rules (checked by the universe in real time), the world of languages is a flatter projection. Humans are not even that good at them! We make mistakes talking and writing, and certainly need to spend time with programming to get logical flows internalized. In the evolutionary chain of events, mastering languages was the defining characteristic of humans. However, we can only take pride in that when compared to animals, not this new AI machinery, because we made them, and distilled the refined versions of ourselves into them. So now they can write, talk, and do a lot more complicated things following the explicit and latent flow of languages.

Think about this, and think about what individuals now can use AI agents for. In this article, the author documented the lengths agents would go to accomplish the directive. Excerpt:

> So Claude Code got creative. It downloaded a Python audio analysis package and essentially listened to its own output, dissecting the waveform.

So much exploratory work dealing with unknowns and unknown unknowns is compressed and accelerated into a session with AI & agents[2]! There is no excuse anymore for hand-waving “this is an unknown risk” (thus taking more time to figure out). You just didn’t get into a chat with your AI friend, or an exploratory session with agents. The bottleneck is on YOU. Specifically, it is on your driving capacity and mental plasticity. Just as vertical scaling on modern cloud machines and memory-safe languages are accelerating data engineering within an individual’s reach, AI is accelerating all knowledge work.

The best kind of person this benefits is someone who is driven, deliberate, and introspective with the bigger picture. The drive can now be amplified without a ceiling. The deliberation means they have specific goals with nuanced needs that require meticulousness and precision. The introspection with the bigger picture means they keep organizing and creating enough empty space in their mind to accommodate new knowledge as AI shoves it in, and convert it into a cohesive whole of their own. AI encourages, enables, and fosters the entrepreneurial side of people.

Now let’s consider another angle. You have someone new to your company, and want to assign them work, either as their manager, or as their senior mentor/peer. What’s the best way to do it? To start, they need a lot of context. They would spend days and weeks getting onboarded and gaining deeper familiarity with your company’s stack, absorbing that institutional knowledge, and contributing to a small thing, then a medium thing, then hopefully bigger things. They would spend a good few hours just taking in the density of one interesting tutorial, RFC, or blog post. They get tired after just a few hours, and have to manage the stress of talking to others, asking questions while trying not to sound like an idiot, or bringing up proposals while trying not to get someone mad. You would need to sit in with them, or better, talk through lunch and dinner many times to point and advise so they can do as good a job as you, if not better. This is of course all enjoyable if you enjoy it, but tedious if you do too much of it just to scale the company ASAP.

Longer term, you would need to create an org structure that reflects the limits of human attention and communication. Your company might just look like a big Hadoop cluster, with being bandwidth-bound as the usual struggle. Adding people is helpful on the margin if you really need to get +1 thing done, but scaling is extremely sublinear, and at some point, offers negative benefits. Scalability! But at what COST?

Vertical scaling solves this. If you could consolidate more resources per individual and boost their capabilities, communication overhead would decrease accordingly. It improves locality, and avoids passing big contexts around. The ZIRP era is closing out, and even if it makes a comeback, the fundamental unit of productivity is drifting towards higher capabilities of individuals, instead of merely more of them.
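A toy model, not drawn from any measurement, can make the horizontal versus vertical trade-off concrete. It assumes every person produces a fixed amount of output and every pair of people pays a fixed coordination cost; both parameters and the function names below are made up for illustration.

```rust
/// Net output of a team of `n` people under a toy model (an assumption for
/// illustration, not a measured law): each person produces `per_person`
/// units, and each of the n(n-1)/2 pairs costs `pair_cost` units.
pub fn net_output(n: u32, per_person: f64, pair_cost: f64) -> f64 {
    let n = n as f64;
    n * per_person - pair_cost * n * (n - 1.0) / 2.0
}

/// Smallest team size in 1..=max_n that maximizes net output.
pub fn best_team_size(per_person: f64, pair_cost: f64, max_n: u32) -> u32 {
    let mut best = 1;
    for n in 2..=max_n {
        // Strict comparison keeps the smaller team on ties.
        if net_output(n, per_person, pair_cost)
            > net_output(best, per_person, pair_cost)
        {
            best = n;
        }
    }
    best
}
```

With `per_person = 1.0` and `pair_cost = 0.08`, output peaks at 13 people (6.76 units) and goes negative past 26. Under the same pair cost, two people who are each five times as capable (`net_output(2, 5.0, 0.08)`) yield 9.92 units, more than the best purely horizontal team in this model.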

The best kind of organization this benefits is one that identifies, mentors, and empowers individuals to take whole-cloth scopes, be they functional or divisional. These will be insulated from AI automation until robots become first-class citizens, because fundamentally, you want to find people who take care of things and bear the responsibilities, so you don’t have to worry about them. Functional roles like engineering, product, sales, and marketing need at least one person to drive them for the long haul, do what the AI can’t, and get the job done. At a human coordination level, there is additional context that is hard for AI agents to pull in. Big divisions target categorical customer segments with domain-specific requirements over a stable stretch of time, and these also carry additional context. In other words, cut the org boundaries at how much of the physical world the tasks demand, appoint people, and enable them to scale AI agents to fit the newly broadened individual capability boundaries.

1. You should totally put Claude Code into your MR/PR review process. It sits in the right context (the diff, description & comments), and helps with the reviewer’s understanding. Done well, it’s not just a cynical game of AI reviewing AI.

2. There is an aside on the serial nature of an agent’s plan of action, which clashes with GPU chips’ high-throughput but high-latency nature. This led to NVIDIA’s choice to license Groq. There is also the “acquiring the competitor” angle, as has lately been revealed.