I build machine learning models, vector search engines, and large-scale data systems. With a background in computational physics, I am interested in performance computing and the hardware, language, and software advances that shape systems at scale.

I work closely with production systems and teams, focusing on changes that improve outcomes. I aim to understand how systems are actually used before evolving them, so performance and simplicity compound rather than fight the organization.

In this blog, I write about the technical and the organizational, where implementation meets strategy. Early in my career, I optimized for performance. Now I optimize for decisions.

EXPERIENCE

Clearview AI

Interim Head of Engineering, 2025

Head of ML / Principal Engineer, 2021 - 2025

I had previously worked on computer vision / facial recognition back in 2017, and really wanted to solve the two biggest problems in the industry - the accuracy of the algorithm, and the accuracy & scalability of the vector search engine at increasingly large sizes (deca-billion scale and more). I ended up solving them in the first two years. Further, I grew a lot more, both into technical systems engineering and scaling engineering goals & operations.

- Created a facial recognition algorithm ranked US #1 and World #2 (time of submission) by NIST (updated in 2024).

- Researched & implemented a 70B vector search engine that has proven its value. Fused high-profile open-source C++ libraries and created Python bindings. $MM annual savings.

- Achieved SOTA accuracy in the domain category with efficient model training orchestrating a cluster of nodes. Became early adopters of alternative chips, e.g. Intel Habana Gaudi2.

- Drove model efficiency using distillation training, quantization, pruning/trimming & hardware acceleration. Streamlined deployment with model encryption & serving engines. Early user of the NVIDIA stack - TensorRT/Triton.

- Established foundational ML/data practices & native (C++ & Rust) tooling for performance-critical tasks.

- Collated 5PB of small objects on S3 to accelerate future batch processing, and avoid vendor lock-in.

- Designed & implemented multiple compute-intensive data pipelines & inference infra for images/videos, pulling at 150 Gbps DC-scale throughput. $100k cost reduction per recurring batch job.

- Consolidated infra and renegotiated with vendors, leading to 40% drop of infra COGS.

- Revamped team processes and executed on hiring of key divisions.

- Open-source work: msgpack-numpy-rs, sulfite, gitlab-migrator

Bloomberg LP

Senior Software Engineer, Platform, 2017 - 2021

I became more equipped with key data structures, system design patterns, essential tools, and frameworks.

- Maintained widely-used bare-metal SFTP infra with 7M daily logins.

- Designed & implemented reliable account management, auth, routing, caching, messaging for cloud-based next-gen SFTP. Wrote OS-level modules and web servers for auth interfacing with OpenSSH. Managed deployment on internal Kubernetes-based PaaS.

- Leveraged S3 Storage as SFTP subsystem with file system emulation and cross-data-center replication & failover.

- Spearheaded successful multi-year high-stake account migration as technical lead.

University of Toledo

Ph.D., Computational Physics, 2012 – 2017

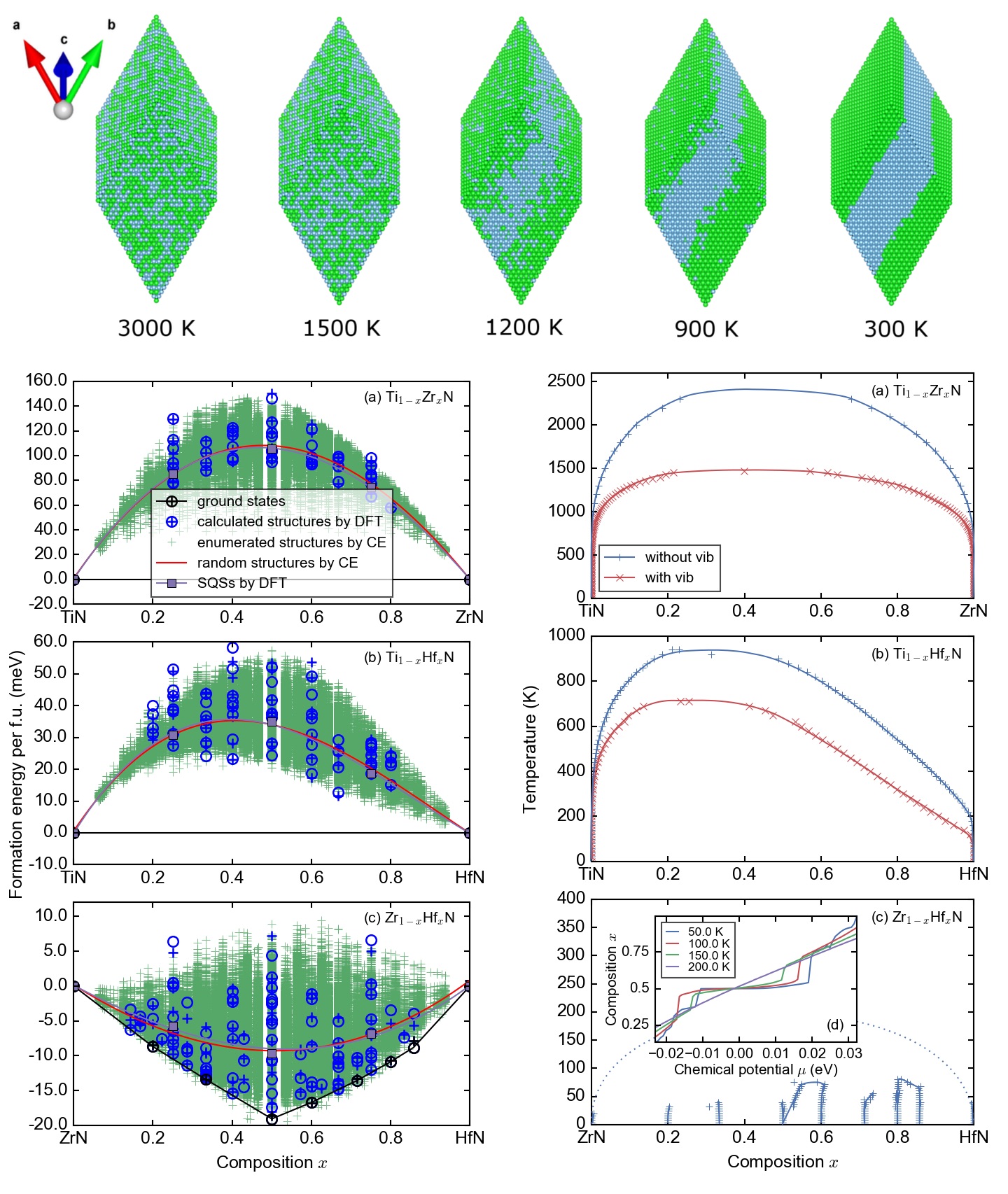

I spent four of the five years here as a funded researcher working on First-Principles materials simulations with Density Functional Theory on supercomputing clusters. It was an exciting field that only became feasible after ~50 years of advances in supercomputing since WWII… not just quantum mechanics theories anymore. However the tooling around the core Fortran software package VASP was very scarce, and I had to be very proactive about what to learn and build. This journey taught me a lot of things, resilience being the most valuable aspect of it. Because of the fundamental similarity in problem scope (iterative optimization), working approach (scientific experiments) and technical requirements (big computers), it naturally paved my way to the growing Data Science and Machine Learning fields, and as soon as GPUs became more available, Deep Learning.

- Specialized in materials simulations with parallel computing clusters (papers).

- Calculated electronic ground states with gradient descent & residual minimization schemes, (non-)linear regression - similar routines as in ML/DL frameworks. Had various classical ML projects.

- Open-source work: ScriptsForVASP, pydass_vasp, pyvasp-workflow

- Built a materials database website with a modern stack, supporting tabulation/graphing, user auth/contribution.

Dissertation

Dissertation on material properties prediction with cluster expansion regression models

Predicting properties from atomic configurations with linear models, cross validation and selection (cluster expansion formalism).

NOTE: if you would like to plan for a transition from PhD to the SWE/ML/AI industry, prepare early, and read A Survival Guide to a PhD from Andrej Karpathy and the HN discussion.

Nanjing University

Bachelor of Science, Materials Science & Engineering (Materials Physics), 2008 – 2012

This major was cross-disciplinary in nature. As a result, I studied a broad range of subjects: materials science & engineering, physics, chemistry, electrical engineering, computer science, math, statistics, etc. I also prepared and participated in a mathematical modeling contest with a team that focused on operations research.

TALKS

Migrating 50TB Data From a Home-Grown Database to ScyllaDB, Fast

(2025-03-04)

Terence shares how Clearview AI’s infra needs evolved and why they chose ScyllaDB after first-principles research. From fast ingestion to production queries, the talk explores their journey with embedded DB readers and the ScyllaDB Rust driver, plus config tips for bulk ingestion and achieving data parity.

The Rust Invasion: New Possibilities in the Python Ecosystem

(2024-10-10)

I presented at the local MadPy meetup group on the new possibilities in the Python ecosystem in light of recent development in the Rust world. Spent some time curating those links, so they may be worth a shuffle!

MEDIA

(2023-06-28)

As explained in a company blog post by Liu and expanded on in conversation with Biometric Update, Clearview believes the smarter way is to index vectors so only small portion needs to be searched. This means “you can effectively search only a small portion of the database, finding very highly likely matches,” Liu says.

Blog Post: How We Store and Search 30 Billion Faces

(2023-04-18)

The Clearview AI platform has evolved significantly over the past few years, with our database growing from a few million face images to an astounding 30 billion today. Faces can be represented and compared as embedding vectors, but face vectors have unique properties that make it challenging to search at scale.

(2022-08-25)

Clearview Vice President of Research Terence Liu explained to Biometric Update in an interview that face biometrics algorithms are trained by ingesting several images from each subject, and then organizing data from ingested images into “clusters” with other images from the same subject.

NYT: Clearview AI does well in another round of facial recognition accuracy tests.

(2021-11-23)

In results announced on Monday, Clearview, which is based in New York, placed among the top 10 out of nearly 100 facial recognition vendors in a federal test intended to reveal which tools are best at finding the right face while looking through photos of millions of people.

NYT: Clearview AI finally takes part in a federal accuracy test.

(2021-10-28)

In a field of over 300 algorithms from over 200 facial recognition vendors, Clearview ranked among the top 10 in terms of accuracy, alongside NTechLab of Russia, Sensetime of China and other more established outfits.